How multi-threaded downloading achieves faster file download speeds

Why is it faster to download a large file from the Internet with multiple threads? I looked online and found this explanation: "It is because of I/O blocking. The network is much slower than the CPU; a single thread has a single I/O channel, while multiple threads have multiple I/O channels." As I understand it, with a single thread the slow network cannot keep up with the processing speed of the CPU, which causes a lot of congestion, and with multiple threads and multiple I/O channels the congestion is reduced.

My questions are: On the same multi-core processor, does the number of I/O channels keep increasing as the number of threads increases? Is the one I/O channel of a single-threaded download as fast as each I/O channel of a multi-threaded download? Why can't a single thread get faster by speeding up its I/O channel? Is it because the I/O channel is a piece of hardware, so its speed is limited by the hardware? If the I/O channel is hardware, is the maximum number of I/O channels of a processor equal to its number of cores? And if my guesses are right, does that mean that on a single-core processor, where threads only take turns through time slicing, multi-threaded downloading of large files cannot be any faster?

The ultimate factor that determines how fast a user can download a large file is the network bandwidth available to that user in real time. Other factors are negligible by comparison.

Real-time maximum available bandwidth

In theory, any process that communicates with the Internet has a real-time maximum available bandwidth. It exists objectively and does not bend to the user's will.

If

Bandwidth preempted by the user process in real time = real-time available network bandwidth

that is the ideal case: the user process uses 100% of the available network bandwidth. Whether the process is single-threaded or multi-threaded, there is almost no difference in download speed.

Ideals are plump, but reality is skinny, because in practice:

Bandwidth preempted by the user process in real time < real-time available network bandwidth

In this case, if the bandwidth that the user process can preempt in real time comes very close to the real-time available bandwidth, that is nearly perfect too. But what is the real-time available bandwidth?

Nobody knows! The real-time available bandwidth changes from moment to moment.

The operating system does this work on the user's behalf. TCP uses its traffic detection (congestion control) mechanism to continuously probe the real-time available bandwidth and match its sending rate to it. This looks beautiful!

Why do I say it only looks beautiful?

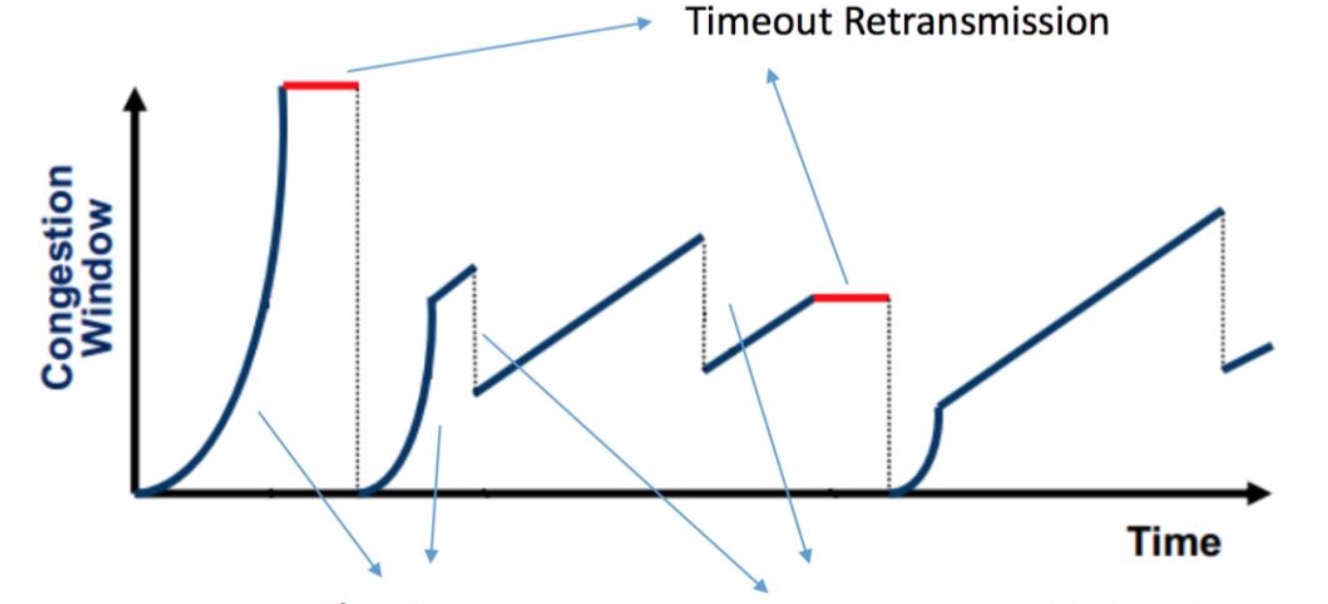

The traditional TCP traffic detection mechanism has a serious flaw: as soon as packet loss is detected, the sending rate is immediately cut to 1/2. From that halved rate, as long as there is no further packet loss, the sending rate increases by a fixed increment each round (linear growth) until it again reaches the point of packet loss, that is, the real-time available bandwidth. Then the rate is halved again, and this cycle repeats until the file download ends. If packets are still lost in the next detection cycle, the rate is halved once more from the already halved rate, and so on.

Obviously, pairing exponential decrease with linear growth is very lopsided: the rate drops quickly but takes a long time to recover. The direct consequence is that the actual transmission rate can be much lower than the real-time available bandwidth.
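To make the halve-then-grow-linearly cycle concrete, here is a minimal sketch of a single toy flow. It is not real TCP: the function name `simulate_aimd`, the capacity of 100 units, and the increment of 1 unit per step are all assumptions chosen for illustration, and "loss" is modeled simply as the rate exceeding the link capacity.

```python
def simulate_aimd(capacity, steps, increase=1.0, start=1.0):
    """Return the delivered rate per step for one toy flow.

    Hypothetical model: the rate grows by `increase` each step, and
    exceeding `capacity` counts as packet loss, which halves the rate.
    """
    rate = start
    delivered = []
    for _ in range(steps):
        # The link cannot carry more than its capacity.
        delivered.append(min(rate, capacity))
        if rate > capacity:
            rate = rate / 2          # loss detected: cut the rate to 1/2
        else:
            rate = rate + increase   # no loss: linear growth
    return delivered


CAPACITY = 100.0
rates = simulate_aimd(capacity=CAPACITY, steps=1000)
utilization = sum(rates) / (len(rates) * CAPACITY)
print(f"average utilization of the link: {utilization:.0%}")
```

In this toy model the rate keeps sawing between about half of the capacity and the capacity itself, so the average stays clearly below the available bandwidth.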

Multi-threaded vs single-threaded

The advantage of multiple threads over a single thread is that several threads, each typically on its own TCP connection, compete for the real-time available bandwidth at the same time. Although multithreading is logically parallel, the threads are still processed serially in time, so at any given moment each thread is at a different stage.

At any moment, some threads have just been penalized with a 1/2 rate cut for packet loss, some are in the phase where the rate doubles (slow start), and some are in the linear growth phase. Combining the download rates of all the threads yields a relatively smooth download curve, and this curve should sit above the single-threaded download rate most of the time. This is where the speed advantage of multi-threaded downloading shows.
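The smoothing effect can be illustrated with a small toy simulation. This is only a sketch under strong assumptions: the names `simulate_flows` and `utilization` are hypothetical, every flow grows by 1 unit per step, and when the combined rate exceeds the link capacity only the currently fastest flow is treated as having lost packets (which is what keeps the flows out of step with each other). Slow start is left out.

```python
def simulate_flows(n_flows, capacity, steps, increase=1.0):
    """Return the combined delivered rate per step for n toy flows."""
    rates = [1.0] * n_flows
    delivered = []
    for _ in range(steps):
        total = sum(rates)
        # The link cannot carry more than its capacity.
        delivered.append(min(total, capacity))
        if total > capacity:
            # Assumption: only the fastest flow sees the loss and halves.
            fastest = rates.index(max(rates))
            rates[fastest] /= 2
        else:
            # No loss: every flow grows linearly.
            rates = [r + increase for r in rates]
    return delivered


def utilization(delivered, capacity):
    """Fraction of the link capacity used on average."""
    return sum(delivered) / (len(delivered) * capacity)


CAPACITY = 100.0
single = utilization(simulate_flows(1, CAPACITY, 1000), CAPACITY)
multi = utilization(simulate_flows(8, CAPACITY, 1000), CAPACITY)
print(f"1 flow: {single:.0%}   8 flows: {multi:.0%}")
```

With eight flows, a single halving removes only a small slice of the combined rate, and the combined rate also climbs back eight times as fast, so in this model the aggregate stays much closer to the link capacity than a single flow does.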

However, things change if the TCP traffic detection mechanism is more intelligent, as with the BBR algorithm. The biggest improvement of BBR is that it abandons the traditional TCP approach of raising or lowering the rate based on whether packets are lost. Instead, BBR measures the maximum available bandwidth of the network in real time and matches the sending rate to it, hovering in a small range around the real-time available bandwidth and avoiding large fluctuations. Because the sending rate can come very close to the real-time available bandwidth, multi-threading shows little advantage over single-threading.
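The idea of following a measured bandwidth rather than reacting to every loss can be sketched with a windowed maximum over recent delivery-rate samples. This is a deliberately simplified illustration, not the BBR implementation: the class name `BandwidthEstimator`, the window sizes, and the sample values are made up, and the real algorithm also tracks round-trip time and periodically probes for more bandwidth, which is omitted here.

```python
from collections import deque


class BandwidthEstimator:
    """Toy estimator: the largest delivery rate seen in the last `window` samples."""

    def __init__(self, window=10):
        # A deque with maxlen drops the oldest sample automatically.
        self.samples = deque(maxlen=window)

    def update(self, delivery_rate):
        """Record one measured delivery rate and return the current estimate."""
        self.samples.append(delivery_rate)
        return max(self.samples)


# Made-up delivery-rate samples; the dip to 60 stands for one bad round.
est = BandwidthEstimator(window=10)
samples = [80, 95, 100, 60, 98, 100, 97]
pacing = [est.update(s) for s in samples]
print(pacing)

# With a short window, the estimate follows a lasting drop in bandwidth.
short = BandwidthEstimator(window=3)
followed = [short.update(s) for s in [100, 50, 50, 50]]
print(followed)
```

A single bad sample does not pull the estimate down, so the sending rate is not halved the way it is in the loss-based scheme, while a sustained drop is still picked up once the older samples leave the window.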



Copyright © 2025 PASSHOT All rights reserved.